Bet Small to Win Big: Efficient 2K Video Generation via Deeper-compression AutoEncoder, Linear Attention and Two-Stage Refiner

Want a quick taste of 2K video generation? Try our Code and 720p Demo.

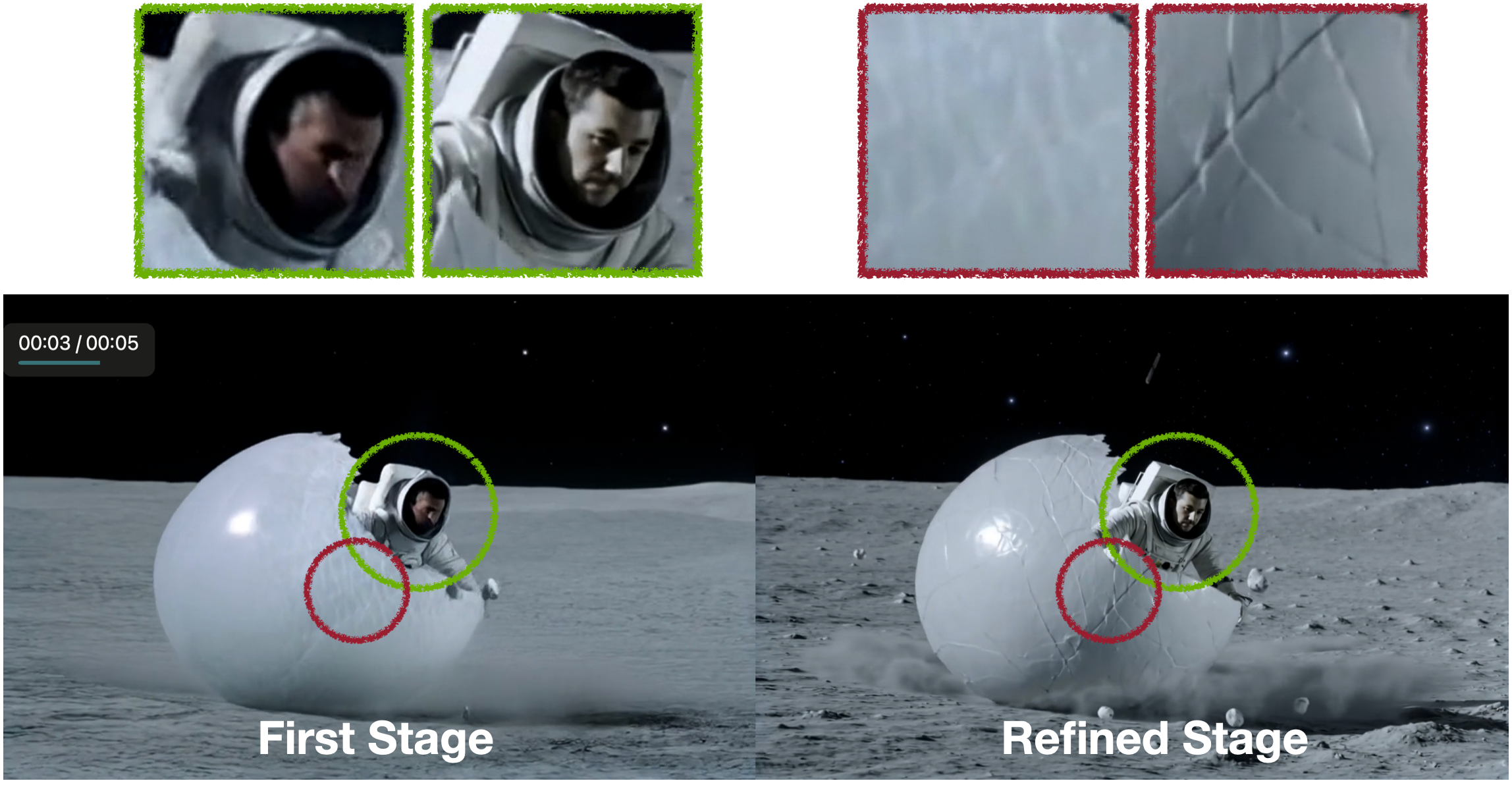

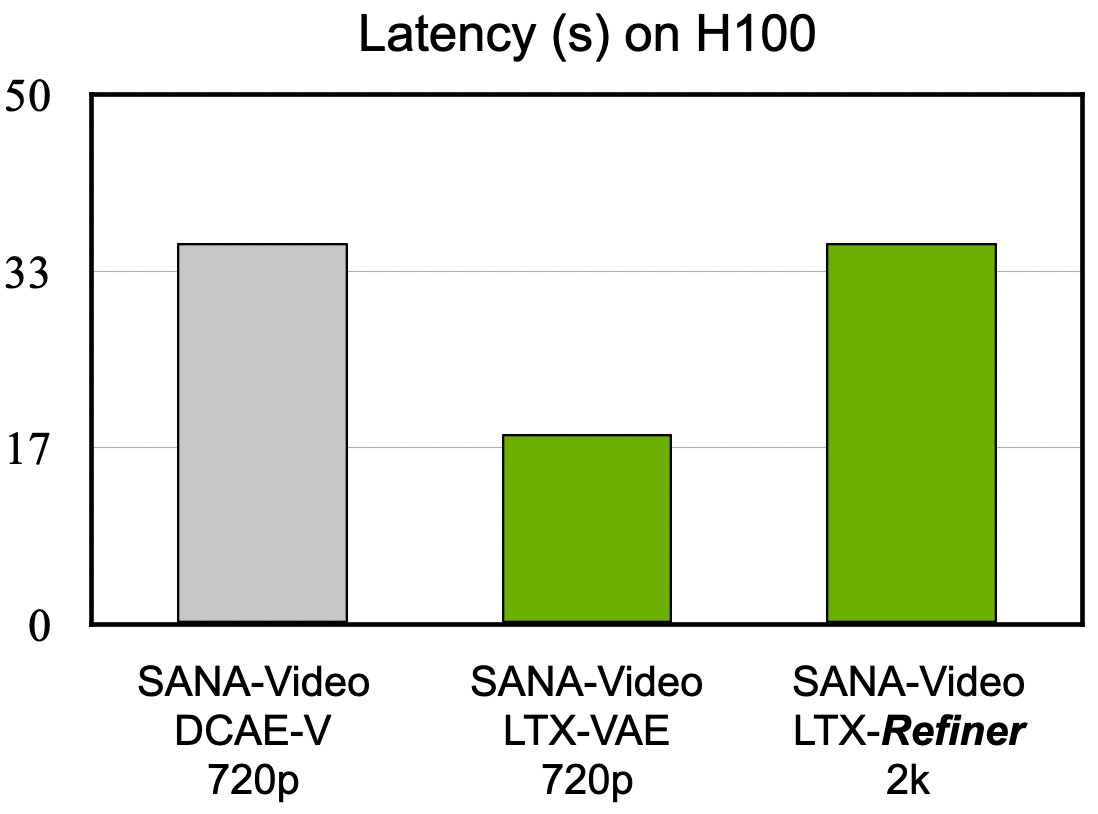

Before detailing the methodology, we present a direct comparison of the two generation stages. Figure 1 and Video 1 illustrate the qualitative improvement provided by the Refiner. Stage 1 (left) captures the global motion dynamics but suffers from compression blur. Stage 2 (right) “translates” this blurry latent into high-fidelity footage. Note the restored high-frequency details, specifically the distinct facial features inside the helmet and the granular texture of the cracking surface, which are smoothed out in the base generation. Figure 2 previews our end-to-end latency results: the 2K refined pipeline matches the generation time of our original 720p pipeline.

1. Motivation: The Compression-Fidelity Trade-off

The core efficiency of SANA-Video stems from its compact 2B-parameter architecture and rapid adaptation capabilities. This efficiency is enabled by high-compression Variational Autoencoders (VAEs), specifically DCAE-V (\(32 \times 32 \times 4\)) and LTX-VAE (\(32 \times 32 \times 8\)), where the factors give the height × width × time downsampling.

These architectures make latent processing very cheap. However, such aggressive compression inevitably discards high-frequency spatial information, which gives the initial output a characteristic “blurry” look. Rather than increasing the parameter count of the base model, which would negate our lightweight advantage, we introduce a dedicated Refinement Stage. This stage acts not merely as a denoiser but as a latent translator: using a step-distilled sampler, it converts the low-frequency “skeleton” generated by the base model into a high-fidelity output.

Betting Small to Win Big: This approach represents a shift from “Monolithic Scaling” to “Modular Efficiency.” Instead of training a massive single-stage model to handle both structure and texture, which is computationally expensive, we decouple the problem. A lightweight Small Model (2B) handles the complex temporal dynamics (Structure), while a specialized Step-Distilled Refiner handles spatial resolution (Texture). This “Small Model + 2-Stage” strategy beats the efficiency of traditional large models by delivering 2K quality at 720p latency, letting us “bet small” on parameters to “win big” on performance.

2. Performance Analysis

To validate this paradigm, we conducted extensive benchmarking on NVIDIA H100 hardware.

2.1 VAE Architecture Comparison

We evaluated three mainstream VAEs at a resolution of \(704 \times 1280 \times 81\) (height × width × frames), focusing on the balance between reconstruction quality (Panda70m metrics) and throughput.

| VAE | Latent Size (H x W x T) | Compression Ratio | Enc / Dec Latency (s) | GPU Mem (GB) | Panda70m (PSNR↑/SSIM↑/LPIPS↓) |
|---|---|---|---|---|---|
| Wan2.1 (8x8x4, c16) | 88x160x21 | 48 | 2.9 / 5.0 | 18 | 34.15 / 0.952 / 0.017 |
| DCAE-V (32x32x4, c64) | 22x40x20 | 192 | 5.2 / 5.6 | 47 | 35.03 / 0.953 / 0.019 |
| LTX2 (32x32x8, c128) | 22x40x11 | 192 | 1.3 / 0.9 | 47 | 32.41 / 0.928 / 0.039 |
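The Compression Ratio column can be checked by hand: it is the number of RGB pixel values per latent value, \((s_h \cdot s_w \cdot s_t \cdot 3)/c\). The short Python sketch below reproduces the three ratios. For Wan2.1 and LTX2 it also reproduces the latent sizes in the table using the causal convention \(1 + (T-1)/s_t\), where the first frame is encoded on its own. The function names are ours and purely illustrative. DCAE-V's 20 latent frames do not follow this formula, so its temporal size is not modeled here.

```python
def compression_ratio(s_h, s_w, s_t, latent_channels, pixel_channels=3):
    # RGB values per latent value: (s_h * s_w * s_t * 3) / c.
    return s_h * s_w * s_t * pixel_channels / latent_channels


def causal_latent_shape(height, width, frames, s_h, s_w, s_t):
    # First frame encoded on its own, then one latent frame per s_t frames.
    if height % s_h or width % s_w or (frames - 1) % s_t:
        raise ValueError("input size not divisible by the compression factors")
    return height // s_h, width // s_w, 1 + (frames - 1) // s_t


# (s_h, s_w, s_t, c) for each VAE in the table.
VAES = {
    "Wan2.1": (8, 8, 4, 16),
    "DCAE-V": (32, 32, 4, 64),
    "LTX2": (32, 32, 8, 128),
}

if __name__ == "__main__":
    for name, (s_h, s_w, s_t, c) in VAES.items():
        print(name, compression_ratio(s_h, s_w, s_t, c))  # 48.0, 192.0, 192.0
    print(causal_latent_shape(704, 1280, 81, 8, 8, 4))    # Wan2.1: (88, 160, 21)
    print(causal_latent_shape(704, 1280, 81, 32, 32, 8))  # LTX2: (22, 40, 11)
```

The arithmetic also makes one design choice visible. LTX2 matches DCAE-V's 192× ratio by doubling both temporal compression and latent channels, which roughly halves the number of latent frames the DiT has to process (11 instead of 20).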

2.2 Latency Analysis: The "Free" Upgrade

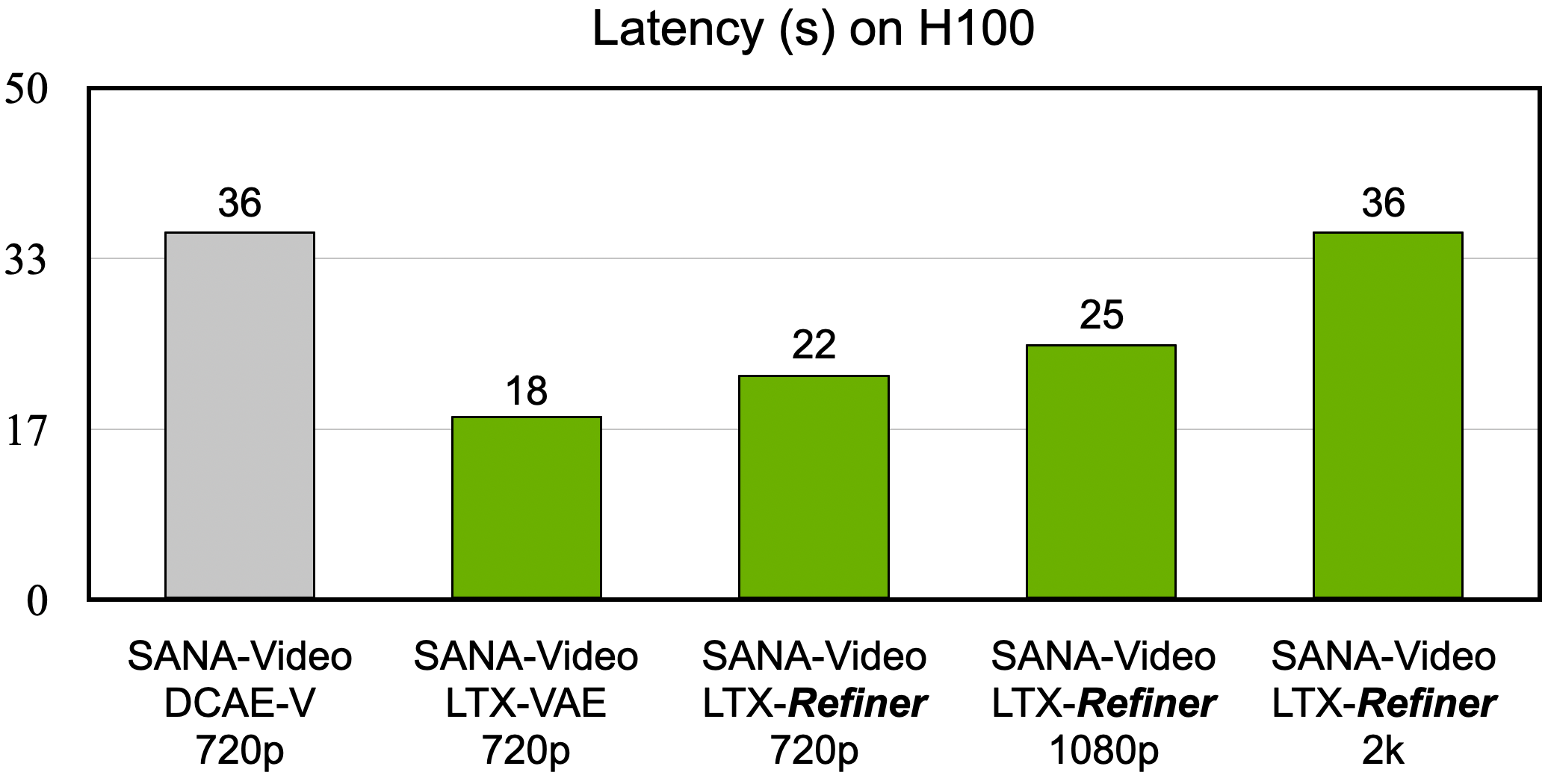

Figure 3 compares total end-to-end latency. Switching to the LTX-based pipeline halves the 720p generation time, and the time saved is enough to run the 2K refinement stage. As a result, 2K video now takes the same time as the previous 720p pipeline.

- SANA-Video-1.0 (720p): ~36s

- SANA-Video-LTX (720p): ~18s (50% lower latency)

- SANA-Video-LTX-Refiner (2K): ~36s (same latency as SANA-Video-1.0 at 720p: a free lunch!)
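As a back-of-envelope check on these numbers, the sketch below computes the 720p speedup, the time the 2K path spends beyond Stage 1, and the ratio of latent positions (height × width × frames, taken from the VAE table in Section 2.1) between LTX2 and DCAE-V. It is not a latency model. It assumes that per-step DiT cost scales roughly linearly with the number of latent positions, as it does for linear attention and the feed-forward layers. It also assumes the same patch size on both latents, which the post does not state.

```python
# Approximate end-to-end latencies (seconds), taken from the list above.
LATENCY_S = {
    "sana_1_0_720p": 36.0,
    "sana_ltx_720p": 18.0,
    "sana_ltx_refiner_2k": 36.0,
}

# Latent grid sizes (H, W, T) at 704x1280x81, from the VAE table.
LATENT_HWT = {"DCAE-V": (22, 40, 20), "LTX2": (22, 40, 11)}


def positions(hwt):
    # Number of latent positions the DiT processes (patch size 1 assumed).
    h, w, t = hwt
    return h * w * t


def latency_summary():
    speedup = LATENCY_S["sana_1_0_720p"] / LATENCY_S["sana_ltx_720p"]
    # Everything the 2K path adds on top of Stage 1 at 720p.
    stage2_budget = LATENCY_S["sana_ltx_refiner_2k"] - LATENCY_S["sana_ltx_720p"]
    token_ratio = positions(LATENT_HWT["LTX2"]) / positions(LATENT_HWT["DCAE-V"])
    return speedup, stage2_budget, token_ratio


if __name__ == "__main__":
    s, b, r = latency_summary()
    print(f"720p speedup: {s:.1f}x, 2K budget: {b:.0f}s, LTX2/DCAE-V positions: {r:.2f}")
```

The LTX2 latent has 0.55× as many positions as the DCAE-V latent (9,680 vs 17,600). This is in line with the roughly halved 720p latency, alongside the faster LTX2 decoder in the table (0.9 s vs 5.6 s).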

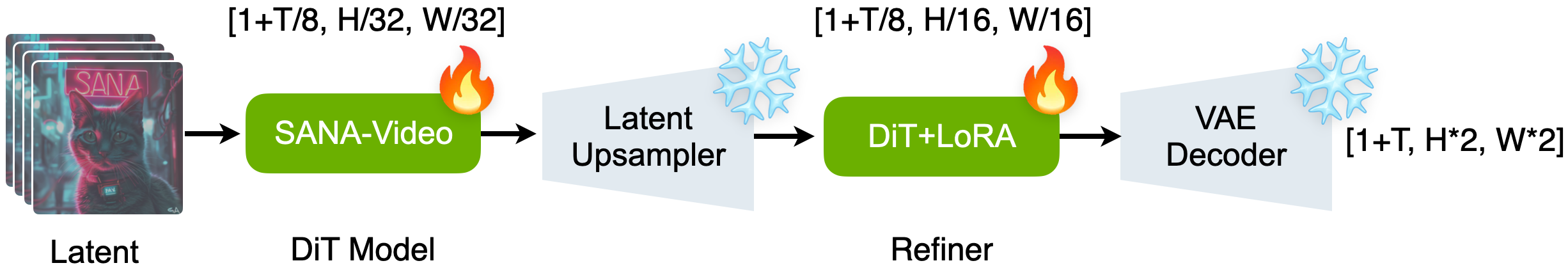

Our upgrade from 720p to 2K is effectively a free lunch: a large jump in resolution at no additional cost in user-facing latency. Figure 4 illustrates the complete two-stage inference pipeline.
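Figure 4 is the authoritative description of the pipeline. As a rough mental model, the two stages can be wired together as in the Python sketch below. Every callable here is a hypothetical placeholder rather than the released SANA-Video or LTX-2 API. The explicit 2× latent upsampling step between the stages is our assumption about how the resolution increase is realized.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

import numpy as np

# Latent layout assumed here: (channels, frames, height, width).
Shape = Tuple[int, int, int, int]


@dataclass
class TwoStagePipeline:
    """Hypothetical wiring of the two-stage flow; placeholders, not a released API."""

    base_model: Callable[[str, Shape], np.ndarray]  # Stage 1: SANA-Video on LTX-VAE latents
    upsample: Callable[[np.ndarray], np.ndarray]    # assumed latent upsampler between stages
    refine: Callable[[np.ndarray], np.ndarray]      # Stage 2: step-distilled refiner
    decode: Callable[[np.ndarray], np.ndarray]      # LTX-VAE decoder

    def generate(self, prompt: str, latent_shape: Shape) -> np.ndarray:
        z_structure = self.base_model(prompt, latent_shape)  # motion and layout, blurry detail
        z_large = self.upsample(z_structure)                 # move to the higher-res latent grid
        z_detail = self.refine(z_large)                      # add high-frequency texture
        return self.decode(z_detail)


def nearest_upsample_2x(z: np.ndarray) -> np.ndarray:
    # Toy stand-in: nearest-neighbour 2x along height and width, frames unchanged.
    return z.repeat(2, axis=2).repeat(2, axis=3)


if __name__ == "__main__":
    pipe = TwoStagePipeline(
        base_model=lambda prompt, shape: np.zeros(shape),  # dummy Stage 1
        upsample=nearest_upsample_2x,
        refine=lambda z: z,                                # identity placeholder
        decode=lambda z: z,                                # returns the latent itself
    )
    print(pipe.generate("an astronaut on cracking ice", (128, 11, 22, 40)).shape)
```

Keeping each stage behind its own callable mirrors the decoupling argued for in Section 1. The base model can be swapped or retrained without touching the refiner, as long as both agree on the LTX-VAE latent space.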

3. Engineering Implementation

3.1 Post-training Adaptation Strategy

A significant engineering challenge arose from the architectural mismatch between SANA-Video and the LTX2 Refiner. SANA-Video was trained on DCAE-V latents (\(c=64\)), whereas the LTX2 Refiner operates on LTX-VAE latents (\(c=128\)).

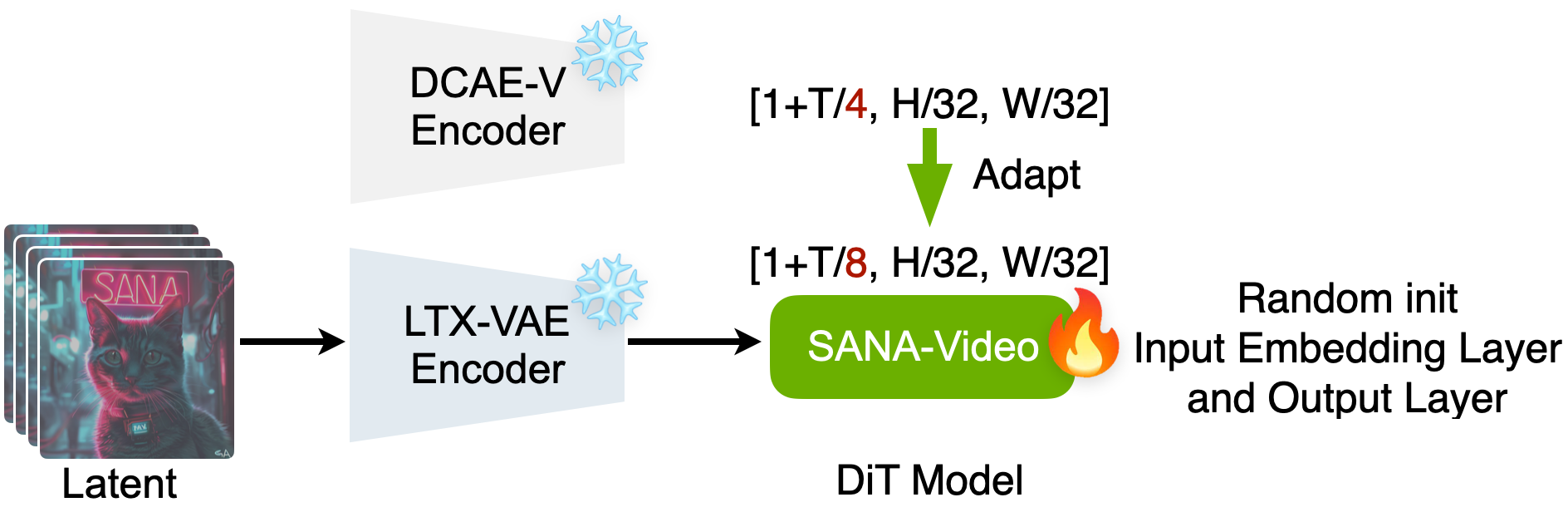

To resolve this, we applied a rapid fine-tuning adaptation to the SANA-Video 2B model. As illustrated in Figure 5, both the original DCAE-V encoder and the target LTX-VAE encoder remain frozen during the VAE adaptation phase, and only the SANA-Video DiT is fine-tuned. Because only its input and output layers are re-initialized, the learned motion priors are preserved while the model becomes compatible with the new latent space:

- Initialization: We randomly initialized only the Patch Embedding layer and the Final Output layer to accommodate the LTX-VAE latent channels (\(c=128\)).

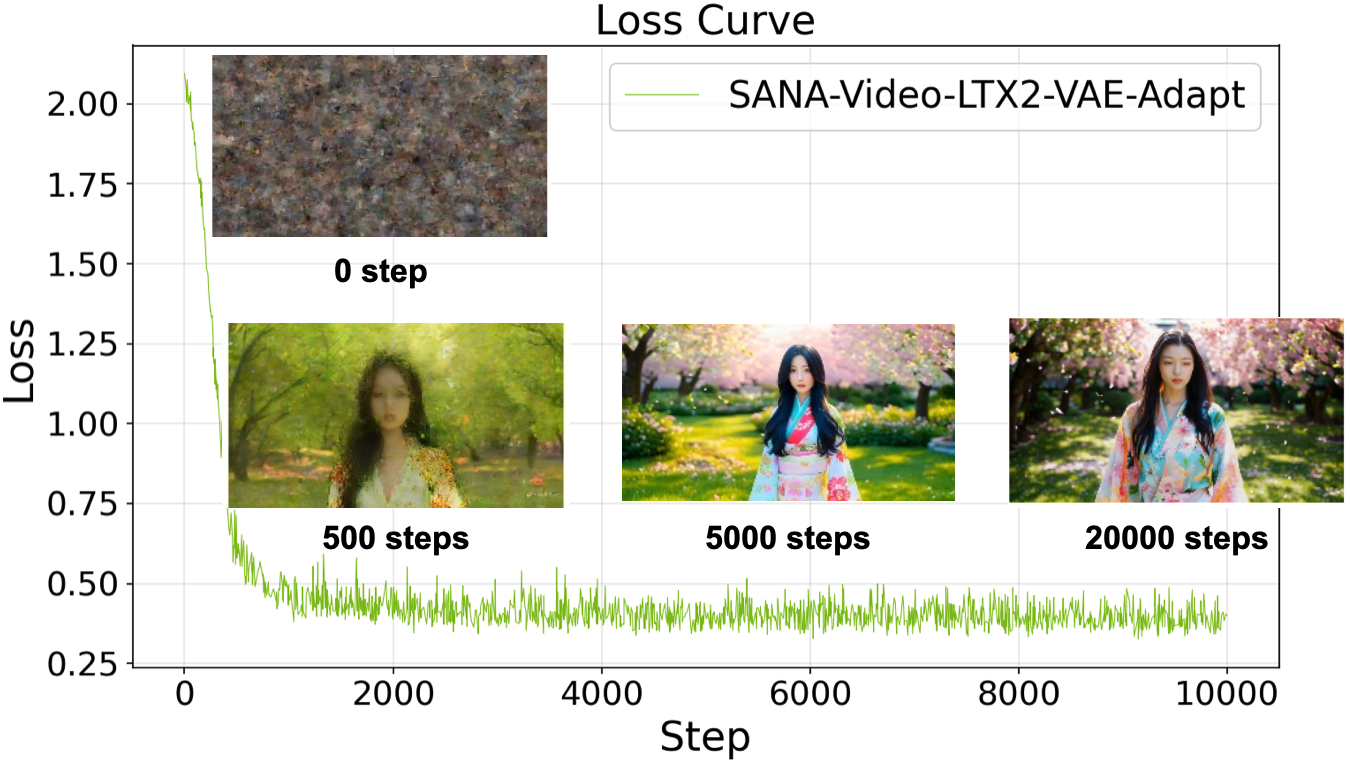

- Convergence: Training logs indicate that semantic information is recovered within 500 steps, with full color and texture restoration achieved by 5,000 steps, as shown in Figure 6.
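The re-initialization step can be sketched in a few lines. Below, model parameters are a plain dictionary of NumPy arrays with hypothetical layer names (`patch_embed`, `final_layer`, `blocks.*`). Only the two channel-dependent layers are replaced, so every transformer block keeps its trained weights and, with them, the motion priors. The Xavier-uniform initializer and the patch volume of 1 are assumptions, since the post does not say which initializer or patch size SANA-Video uses.

```python
import numpy as np


def init_linear(rng, fan_in, fan_out):
    # Xavier/Glorot-uniform weight of shape (fan_out, fan_in), zero bias (assumed choice).
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    return {
        "weight": rng.uniform(-limit, limit, size=(fan_out, fan_in)),
        "bias": np.zeros(fan_out),
    }


def adapt_latent_channels(params, new_channels, patch_volume=1, seed=0):
    """Swap the input/output layers for a new latent channel count.

    params: dict mapping hypothetical layer names to {"weight", "bias"} arrays.
    patch_volume: p_t * p_h * p_w of the patchifier (assumed 1 here).
    """
    rng = np.random.default_rng(seed)
    hidden = params["patch_embed"]["weight"].shape[0]
    features = new_channels * patch_volume
    adapted = dict(params)  # shallow copy: untouched layers share their trained arrays
    adapted["patch_embed"] = init_linear(rng, features, hidden)  # latent -> hidden
    adapted["final_layer"] = init_linear(rng, hidden, features)  # hidden -> latent
    return adapted
```

For example, calling `adapt_latent_channels(params, 128)` on a DCAE-V model (\(c=64\)) returns a model whose embedding maps 128 channels into the hidden size, while `params["blocks.0"]` and the other blocks are passed through unchanged.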

This decoupling lets SANA-Video handle the heavy lifting of motion logic (Structure), while the LTX2 Refiner handles the “rendering” (Texture), yielding a plug-and-play enhancement pipeline.

3.2 The Step-Distilled Refiner

The LTX2 Refiner in Stage 2 uses Step Distillation, so it does not need to run a full denoising trajectory. Having been distilled from a many-step teacher model, it injects high-frequency textures into the Stage 1 output in just 4 steps, which keeps the marginal cost of the second stage low.
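To make “4 steps on top of the Stage 1 output” concrete, the sketch below assumes a rectified-flow formulation, \(x_\sigma = (1-\sigma)\,x_0 + \sigma\,\epsilon\), with a model that predicts the velocity \(v = \epsilon - x_0\). The Stage 1 latent is re-noised to the first value of a short, decreasing sigma schedule and then integrated with Euler steps down to \(\sigma = 0\). The schedule values and `velocity_fn` are hypothetical stand-ins for the distilled LTX2 refiner, not its actual configuration.

```python
import numpy as np

# Hypothetical 4-step schedule (5 sigma values, decreasing to 0); not LTX2's config.
SIGMAS = [0.9, 0.7, 0.45, 0.2, 0.0]


def renoise(z_clean, sigma, rng):
    # Rectified-flow forward process: x_sigma = (1 - sigma) * x0 + sigma * eps.
    eps = rng.standard_normal(z_clean.shape)
    return (1.0 - sigma) * z_clean + sigma * eps


def refine_few_steps(z_stage1, velocity_fn, sigmas=SIGMAS, seed=0):
    """Partially re-noise the Stage 1 latent, then take len(sigmas) - 1 Euler steps.

    velocity_fn(x, sigma) should predict v = eps - x0 (stand-in for the refiner).
    """
    rng = np.random.default_rng(seed)
    x = renoise(z_stage1, sigmas[0], rng)
    for sigma, sigma_next in zip(sigmas[:-1], sigmas[1:]):
        x = x + (sigma_next - sigma) * velocity_fn(x, sigma)  # Euler step on dx/dsigma = v
    return x
```

With an ideal velocity \((x - x_0)/\sigma\), each Euler step scales the remaining error by \(\sigma_{\text{next}}/\sigma\), so the loop lands exactly on the target latent at \(\sigma = 0\). The re-noising line also shows why the Refiner is not a standalone generator: with `sigmas[0] = 1.0`, the \((1-\sigma)\,x_0\) term vanishes, the Stage 1 latent is discarded, and the 4 steps must build structure from pure noise.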

Crucially, this model is not a standalone generator. As illustrated in Video 2, initializing generation from pure Gaussian noise with the 4-step schedule produced severe structural collapse and semantic incoherence. The Refiner's role is therefore strictly Latent Adaptation and Enhancement: it requires the structural prior provided by the Stage 1 output to perform a high-fidelity translation, rather than generating content from scratch.

3.3 Disabling the Audio Branch via Zero-Initialization

Since the LTX2 architecture employs a dual-branch DiT for joint video and audio generation, the Stage 2 refiner expects both video and audio latents as inputs. However, current open-source video models, including SANA-Video, typically lack audio generation capabilities, and adapting the LTX refiner to such video-only architectures remains an under-explored challenge.

We propose a practical workaround for this dependency: the audio input to the refiner is initialized as a zero tensor. Empirically, we found that letting the scheduler inject noise at its default scale into this zero tensor is enough to simulate the latent distribution the audio branch expects. This strategy lets us leverage the refiner's visual enhancement capabilities without an audio signal or an audio branch in the base model.
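Assuming a rectified-flow scheduler that noises latents as \(x_\sigma = (1-\sigma)\,x_0 + \sigma\,\epsilon\), the workaround reduces to a single line. A zero audio latent becomes \(x_\sigma = \sigma\,\epsilon\), which is Gaussian noise at the scheduler's scale. In the sketch below, the function name, the audio-latent shape, and the reading of “default scale” as the scheduler's first sigma are all assumptions for illustration.

```python
import numpy as np


def dummy_audio_latent(shape, sigma, seed=0):
    """Zero audio latent after scheduler noising (rectified-flow convention assumed).

    shape: hypothetical audio-latent shape, e.g. (tokens, channels).
    sigma: noise level the scheduler applies at the first refinement step.
    """
    rng = np.random.default_rng(seed)
    audio_clean = np.zeros(shape)  # no audio signal available
    eps = rng.standard_normal(shape)
    return (1.0 - sigma) * audio_clean + sigma * eps  # equals sigma * eps
```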

4. Conclusion & Future Work

This update demonstrates the potential of advancing video generation through workflow decoupling rather than monolithic scaling. The Two-Stage Inference paradigm (“Fast Structure” followed by “Beautiful Detail”) addresses the blur introduced by high-compression VAEs while maintaining SANA-Video's signature speed.

Future Roadmap: We are optimizing the training scheme for the refiner and plan to open-source the complete lightweight refiner training workflow to the community.

Citation

If you find our work helpful, please consider citing:

@article{sana_video_with_refiner,
  title={Bet Small to Win Big: Efficient 2K Video Generation via Deeper-compression AutoEncoder, Linear Attention and Two-Stage Refiner},
  author={SANA-Video Team},
  year={2026},
  month={Feb},
  url={https://nvlabs.github.io/Sana/Video/blog/blog.html}
}

References

1. LTX-2: Efficient Joint Audio-Visual Foundation Model

2. Waver: Wave Your Way to Lifelike Video Generation

3. FSVideo: Fast Speed Video Diffusion Model in a Highly-Compressed Latent Space

4. SANA-Video: Efficient Video Generation with Block Linear Diffusion Transformer