Deep Learning for Content Creation Tutorial

In conjunction with CVPR 2019

Goal of the tutorial

Content creation has several important applications ranging from virtual reality, videography, gaming, and even retail and advertising. The recent progress of deep learning and machine learning techniques allows to turn hours of manual, painstaking content creation work into minutes or seconds of automated work. This tutorial has several goals. First, it will cover some introductory concepts to help interested researchers from other fields get started in this exciting new area. Second, it will present selected success cases to advertise how deep learning can be used for content creation. More broadly, it will serve as a forum to discuss the latest topics in content creation and the challenges that vision and learning researchers can help solve.

Organizers:

Deqing Sun, Ming-Yu Liu, Orazio Gallo, and Jan KautzSponsors:

Date, time, and location:

See you on Sunday June 16th at 8:45 in room 204.Recordings

You can now see all the talks here, or click on the video of one specific talk below! Unfortunately, there were some technical glitches and a couple of videos are only partial, we apologize for the inconvenience.News

- We have a great line-up of confirmed speakers, see program below!

- The tutorial was very successful, we would like to thank the speakers and the audience again!

Tentative program

| Talk Title | Speaker | |

| 8:45 - 9:00 | Opening words [Video] | Deqing Sun |

| 9:00 - 9:45 | Paired and Unpaired Image Translation with GANs [Video] [Slides] | Phillip Isola |

| 9:45 - 10:10 | Improving Image Translation [Video][Slides] | James Tompkin |

| 10:10 - 10:25 | Coffee break (15m) | |

|---|---|---|

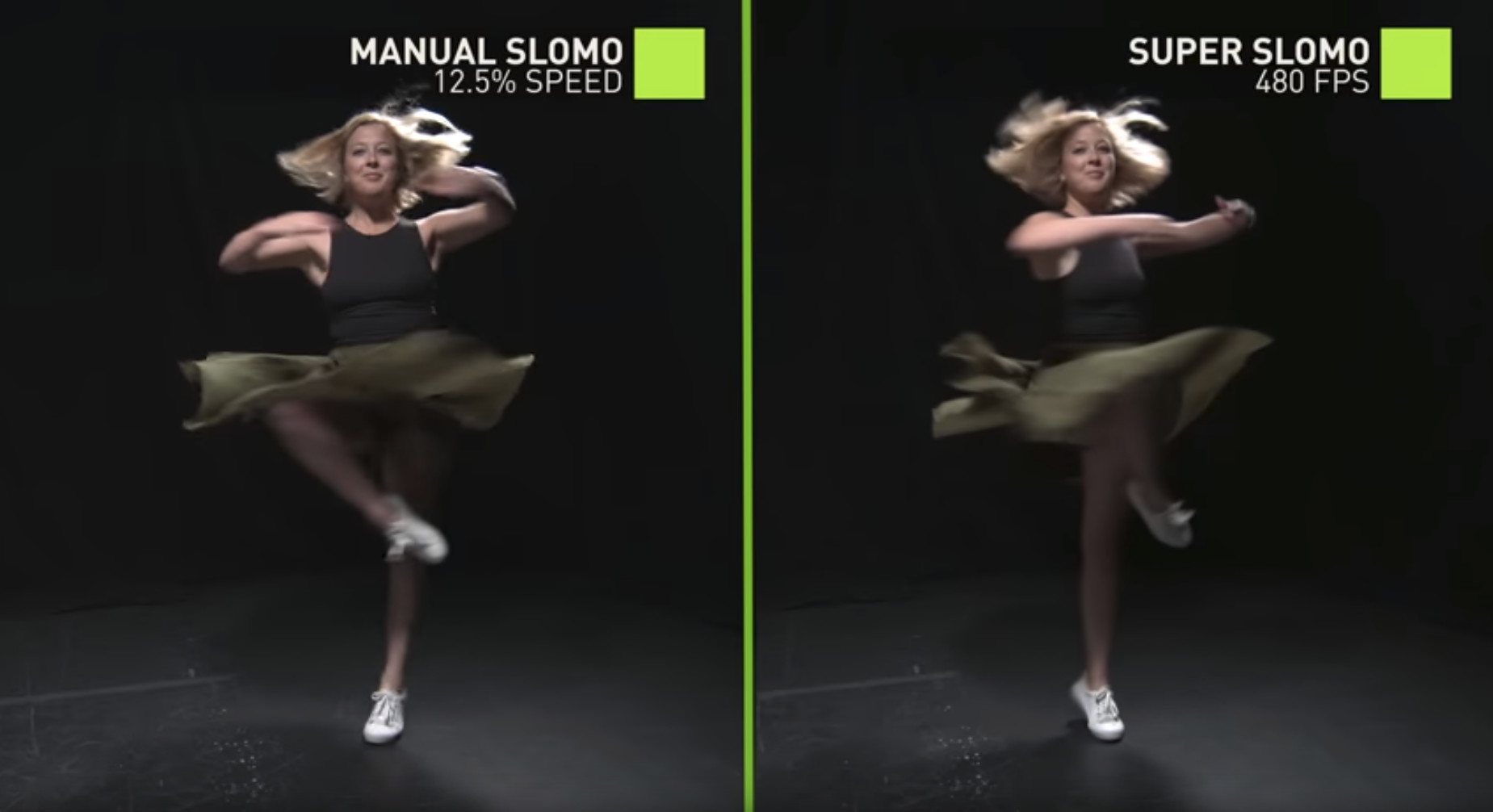

| 10:25 - 10:50 | Super SloMo: High Quality Estimation of Multiple Intermediate Frames for Video Interpolation [Video] | Huaizu Jiang |

| 10:50 - 11:15 | FUNIT: Few-Shot Unsupervised Image-to-Image Translation [Video] | Ming-Yu Liu |

| 11:15 - 11:40 | A Style-Based Generator Architecture for Generative Adversarial Networks [Video] [Slides] | Tero Karras |

| 11:40 - 12:05 | Meta-Sim: Learning to Generate Synthetic Datasets [Video] | Sanja Fidler |

| 12:05 - 13:20 | Lunch break (1h 15m) | |

| 13:20 - 13:45 | GauGAN: Semantic Image Synthesis with Spatially Adaptive Normalization [Video] [Slides] | Taesung Park |

| 13:45 - 14:10 | Style Transfer: Application to Photos and Paintings [Video] | Sylvain Paris |

| 14:10 - 14:35 | vid2vid: Video-to-Video Synthesis [No Video] | Ting-Chun Wang |

| 14:35 - 14:50 | Coffee break (15m) | |

| 14:50 - 15:15 | Visualizing and Understanding GANs [Video] | Jun-Yan Zhu |

| 15:15 - 15:40 | Content Creation by Inserting Objects [Video] [Slides] | Sifei Liu and Donghoon Lee |

| 15:40 - 16:05 | Recent Advances in Neural and Example-based Stylization [Video] | Eli Shechtman |

| 16:05 - 16:20 | Coffee break (15m) | |

| 16:20 - 17:30 | Panel discussion with Phillip Isola, Jun-Yan Zhu, Huaizu Jiang, and James Tompkin | |