Volumetric video, which encodes a time-varying 3D scene into a unified representation for novel-view synthesis of dynamic content, has long been a grand challenge on the path to immersive experiences. High-quality volumetric video enables new immersive applications, such as 3D video conferencing, 3D telepresence, and virtual tutoring in XR. Recent volumetric representations enable fast, high-quality reconstruction of dynamic 3D scenes.

However, generating free-viewpoint videos, either from real-world data or from synthetic generators, presents numerous challenges. First, reconstructing dynamic events with high visual quality at interactive training and playback latencies requires a large amount of computation and substantial storage to encode the relevant details. Domain-specific applications of volumetric video present further challenges. For instance, capturing and synthesizing humans at high fidelity is difficult because of the intricate geometry and appearance of the human body. Reconstructing indoor scenes requires high-fidelity synthesis of challenging real-world objects and materials. By contrast, reconstructing urban environments for tasks such as mapping or autonomous driving requires unifying large spatial areas captured under varying illumination and camera resolutions. To address the cost of multi-view camera setups, synthesis from sparse or monocular camera views would enable broader creation and dissemination of volumetric video. Finally, as volumetric video generation becomes more efficient, new directions explore 4D content as an intermediate representation in multi-modal models, for instance to answer questions about autonomous driving. While these directions remain under active research, each captures a different aspect of how volumetric video representations can be generated or leveraged for practical applications and domains.

As such, our tutorial summarizes the practical challenges of generating and distributing volumetric video in the real world. Given the applicability of 4D priors to a range of computer vision tasks and the growing use of 3D and 4D contexts to represent dynamic scenes, we hope this tutorial inspires researchers to develop approaches that leverage volumetric video for a broader range of use cases.

Agenda

| Category | Title | Speaker | Video | Session Time |
|---|---|---|---|---|
| Introduction | Welcome and Introduction | Amrita Mazumdar, Tianye Li | Video | 9:00 - 9:30 am |
| New Directions & Applications | Generating Volumetric Video from Monocular Inputs | Aleksander Holynski | Video | 9:30 - 10:30 am |
| | Dynamic 4D Humans | Lan Xu | Video | 10:30 - 11:30 am |
| Methods & Techniques | Immersive & Dynamic Spaces | Christian Richardt | Video | 11:30 am - 12:30 pm |
| Lunch Break | | | Video | 12:30 - 1:30 pm |
| New Directions & Applications | Volumetric Video in Autonomous Vehicles | Boris Ivanovic | Video | 1:30 - 2:30 pm |
| Methods & Techniques | Compression and Standardization of Gaussian Splats | Yiyi Liao | Video | 2:30 - 3:30 pm |
| | Towards efficient real-time streaming of volumetric video | Sharath Girish | Video | 3:30 - 4:30 pm |
| Panel | Panel Discussion, Q&A, Closing Remarks | Amrita Mazumdar, Tianye Li | Video | 4:30 - 5:00 pm |

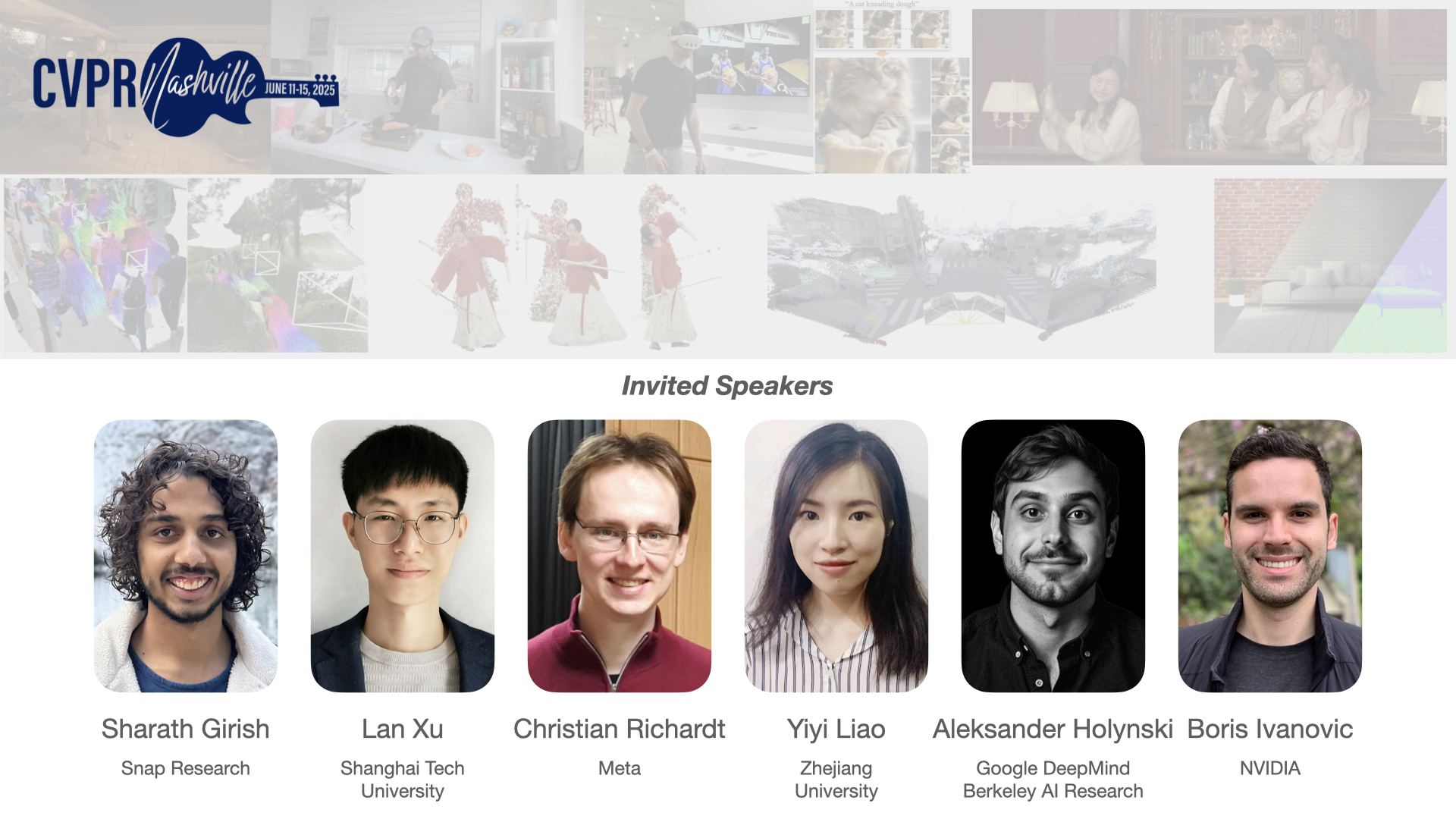

Speakers

Snap Research

Towards efficient real-time streaming of volumetric video

In this talk, I will discuss the importance of learning efficient representations for volumetric video and their impact on real-world applications. I will provide an overview of current techniques aimed at enabling real-time streaming while preserving high visual fidelity and compressibility. The discussion will cover key trade-offs between storage requirements, training times, and rendering speeds. I will then conclude by highlighting open challenges and future directions in the field toward enabling interactive and immersive volumetric video experiences.

Sharath Girish is a research scientist at Snap Inc. He received his Ph.D. from the Department of Computer Science at the University of Maryland, College Park, advised by Prof. Abhinav Shrivastava. His research interests include machine learning and computer vision. Currently, his research focuses on the efficiency, compression, and acceleration of deep networks and of different types of data, such as images, videos, and 3D scenes.

ShanghaiTech University

Dynamic 4D Humans

Talk description coming soon!

Lan Xu is a tenure-track Assistant Professor in SIST at ShanghaiTech University. He received his Ph.D. from HKUST in 2020, supervised by Prof. Lu Fang, and his B.E. from ZJU in 2015. His research focuses on computer vision and computer graphics. His goal is to capture, perceive, and understand human-centric dynamic and static scenes in the complex real world. His research interests include performance capture, 3D/4D reconstruction, scene understanding, and artificial reality.

Meta

Immersive & Dynamic Spaces

My talk dives into the broad spectrum of techniques for capturing, reconstructing, and rendering immersive and dynamic spaces, from the first immersive visual experiences more than 200 years ago all the way to the most recent real-time neural rendering and 3D/4D Gaussian splatting running in virtual reality headsets. I will present the key techniques of the last 10 years and discuss the insights that helped them advance the state of the art and propel the field forward. Join me to learn more about 360° and omnidirectional stereo video, light field video, neural 3D video, immersive, audiovisual, and relightable spaces for virtual reality, and the use of unconventional time-of-flight sensors for capturing dynamic scenes, as well as an outlook on remaining challenges and future work.

Christian Richardt is a Research Scientist at Meta Reality Labs in Zurich, Switzerland, and was previously at the Codec Avatars Lab in Pittsburgh, USA. Before joining Meta, he was a Reader (equivalent to Associate Professor) and EPSRC-UKRI Innovation Fellow in the Visual Computing Group and the CAMERA Centre at the University of Bath. His research interests span image processing, computer graphics, and computer vision; his research combines insights from vision, graphics, and perception to reconstruct visual information from images and videos and to create high-quality visual experiences with a focus on novel-view synthesis.

Zhejiang University

Compression and Standardization of Gaussian Splats

Recent progress in novel view synthesis has made it easier to create high-quality volumetric videos from real-world images, bringing photorealistic immersive media within reach for broad applications. Among these, 3D Gaussian Splatting enables real-time rendering but comes with a substantial memory cost compared to methods like NeRF, highlighting an inherent trade-off between speed and storage. To address this, efficient compression techniques are critical for reducing memory usage while maintaining real-time rendering. In this tutorial, I will first provide an overview of existing compression approaches for Gaussian Splats. I will then introduce 4D scene representations and corresponding compression techniques for volumetric video built on Gaussian Splatting. Finally, I will present recent exploration efforts within MPEG, the international standardization body, aimed at developing standardized Gaussian Splatting codecs for broad industry adoption.

Yiyi Liao is an assistant professor at Zhejiang University, where she leads the X-Dimensional Representations Lab (X-D Lab). Before that, she was a postdoc in the Autonomous Vision Group at the University of Tübingen and the MPI for Intelligent Systems, working with Prof. Andreas Geiger. She received her Ph.D. in Control Science and Engineering from Zhejiang University in June 2018 and her B.S. degree from Xi’an Jiaotong University in 2013. Her research interests lie in 3D computer vision, including scene understanding, 3D reconstruction, and 3D generative models.

Google DeepMind / Berkeley AI Research

Generating Volumetric Video from Monocular Inputs

Talk description coming soon!

Aleksander Holynski is a senior research scientist at Google DeepMind and a postdoctoral scholar at Berkeley AI Research, working with Alyosha Efros and Angjoo Kanazawa. He received a PhD in Computer Science from the University of Washington, where he was advised by Steve Seitz, Brian Curless, and Rick Szeliski, and a B.S. with High Honors from the University of Illinois at Urbana-Champaign. His research focuses on computer vision, computer graphics, machine learning, and generative models. His work has been recognized with best paper awards at ICCV 2023 and CVPR 2024.

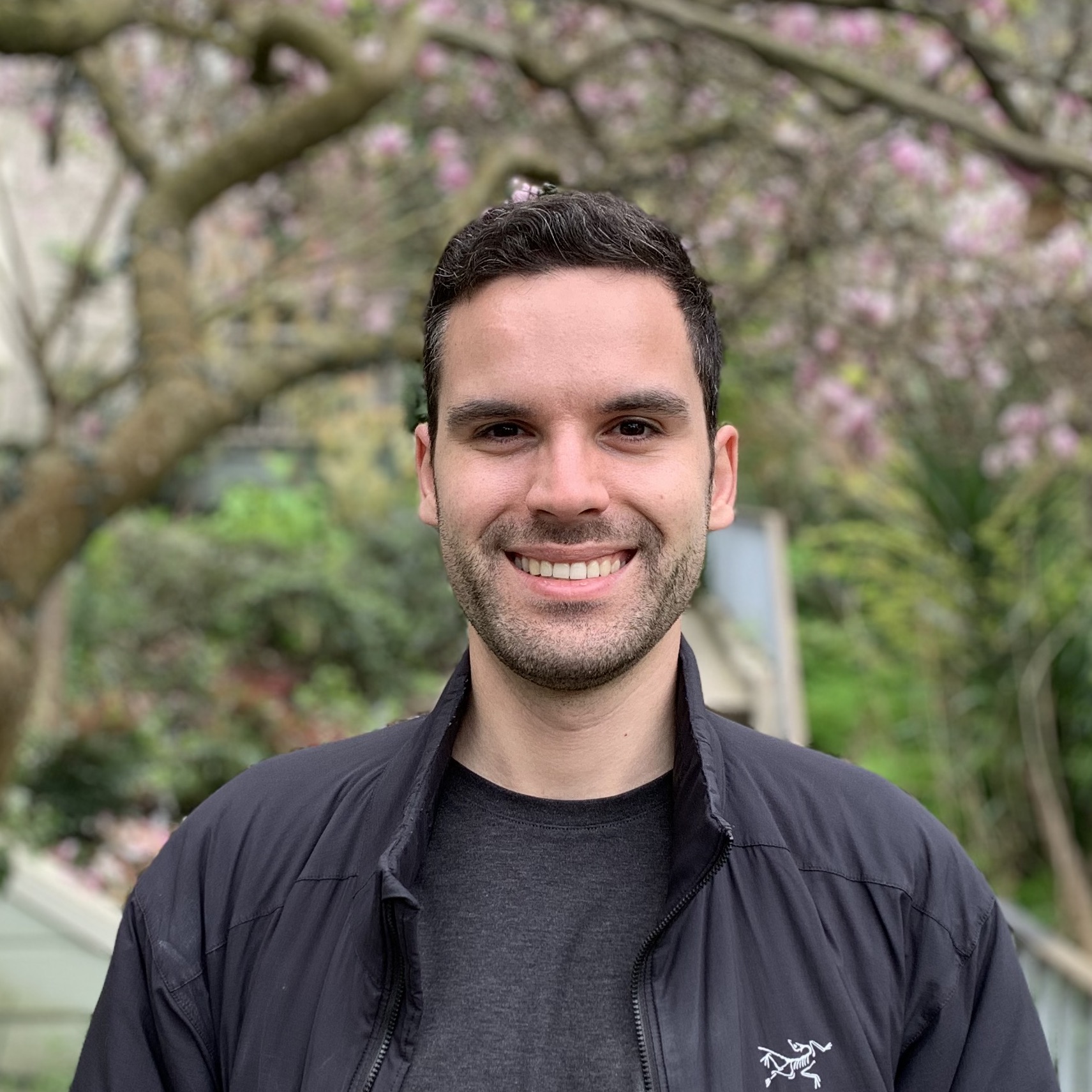

NVIDIA

Volumetric Video in Autonomous Vehicles

In this talk, we’ll discuss the transformative role of volumetric video in autonomous driving, spanning simulation, inference, and safety validation. We will first discuss how volumetric video enables high-fidelity, interactive closed-loop simulation by reconstructing dynamic, real-world 3D scenes. We’ll then explore how volumetric representations can be integrated into the autonomy stack to support efficient, geometry-aware inference. Finally, we’ll show how these representations enable scalable, data-driven safety validation, leveraging closed-loop simulation to reduce the amount of expensive real-world autonomous vehicle (AV) testing needed prior to deployment.

Boris Ivanovic is a Senior Research Scientist and Manager in NVIDIA’s Autonomous Vehicle Research Group. His research interests include novel end-to-end AV architectures, sensor and traffic simulation, AI safety, and the thoughtful integration of foundation models in AV development. Prior to joining NVIDIA, he received his Ph.D. in Aeronautics and Astronautics under the supervision of Marco Pavone in 2021 and an M.S. in Computer Science in 2018, both from Stanford University. He received his B.A.Sc. in Engineering Science from the University of Toronto in 2016.

Organizers

NVIDIA Research

NVIDIA Research

Snap Research

Google DeepMind / Berkeley AI Research

NVIDIA